Lab-Grown Human Brain Embodied in a Virtual World

Our team put a human mini-brain into virtual reality and let people interact with it over the internet. It’s the first time anyone has done this, and we’d like to share a behind-the-scenes look with you.

Introduction

Earlier this year, I had the opportunity to work on a project with FinalSpark, a Swiss startup developing the world’s first wetware cloud platform. We created the Neuroplatform, a system that enables researchers and developers to interact remotely with human brain organoids. Our platform essentially allows “running software” on biological neural networks (BNNs), with the ultimate goal of achieving and deploying synthetic biological intelligence.

The potential benefits of BNNs over traditional silicon-based artificial neural networks (ANNs) are numerous, though today they remain mainly theoretical. The hypothesised advantages include significantly lower energy consumption (the human brain operates on only about 20 watts), higher-level cognitive and adaptive behaviour such as creativity, true zero-shot learning, superior pattern recognition and generalisation, better handling of ambiguity and noise, and the potential for self-repair and neuroplasticity.

What is a brain organoid?

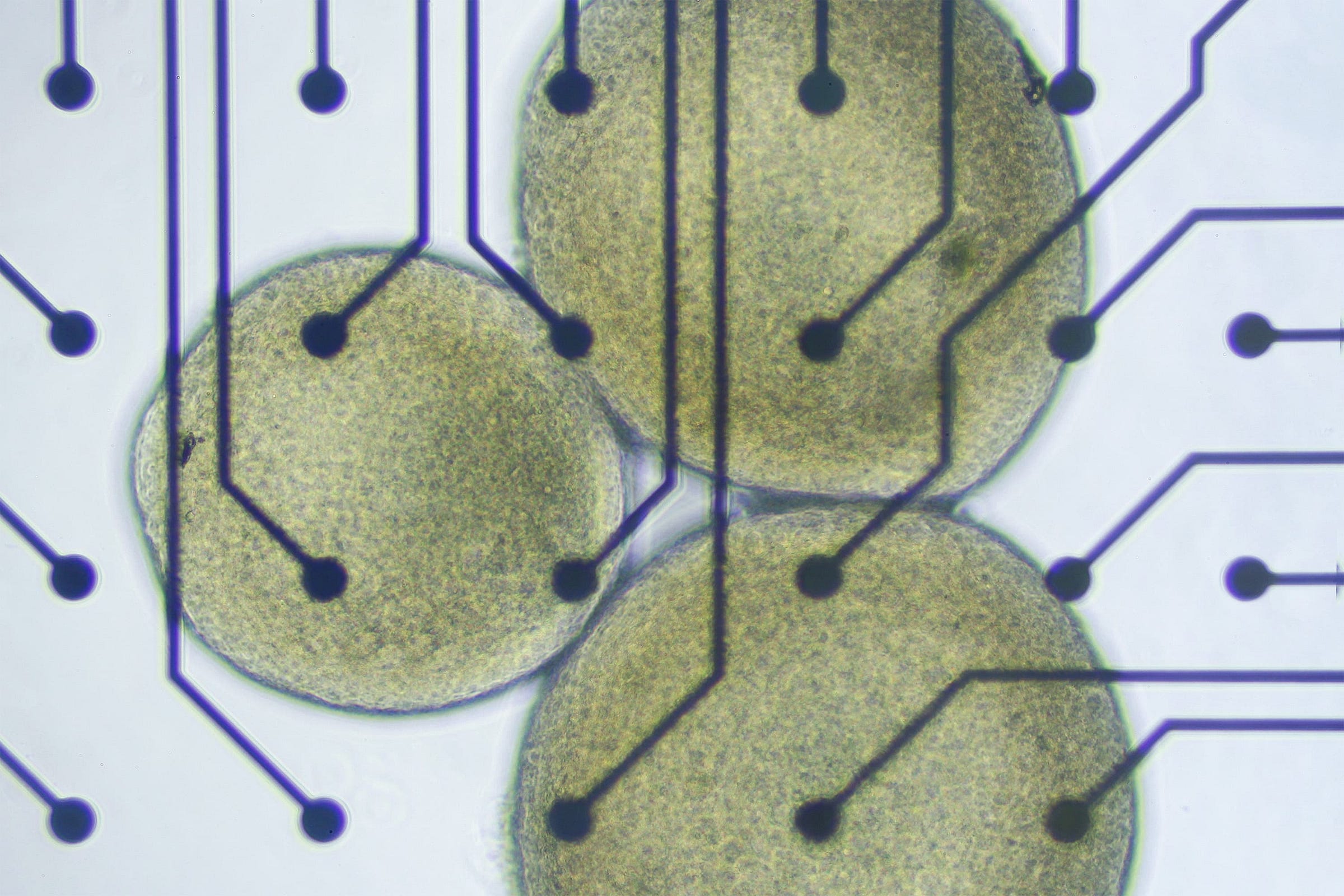

A brain organoid is a three-dimensional cellular model of the human brain grown in a laboratory from stem cells, as shown in Figure 1. These miniature “mini-brains” are typically the size of a pea or smaller and contain various types of brain cells organised in a structure that mimics aspects of a developing human brain. While they don’t have the full complexity of an adult human brain, brain organoids can develop basic neural circuits and exhibit spontaneous electrical activity. Researchers usually use brain organoids to study human brain development and neurological disorders and, more recently, as is the case with FinalSpark, as biological/wetware computing components.

The Neuroplatform currently hosts 16 active brain organoids, with the potential to rapidly deploy many more from our extensive stock of neural progenitors stored in liquid nitrogen. These mini-brains, derived from induced pluripotent stem cells (iPSCs), are maintained in incubators at 37°C (shown in Figure 2), closely mimicking the intracranial conditions of the human body. Each organoid, comprising approximately 10,000 neurons, interfaces with a multi-electrode array (MEA) for bidirectional electrical communication. The platform also incorporates a sophisticated microfluidic system and an ultraviolet light-controlled uncaging mechanism, enabling precise delivery of nutrient media, neurotransmitters, and neuromodulators like dopamine and serotonin, thus closely replicating the functional environment of the human brain.

As a research and development engineer in our small team, I played a key role in developing the Neuroplatform’s foundation, application programming interface (API), and software development kit (SDK). Our system empowers researchers to conduct complex experiments, including reinforcement learning, reservoir computing, and synaptic connectivity studies, to name a few. Our work builds upon the foundations laid by pioneers in the field, such as Steve M. Potter (who is an advisor to FinalSpark) and Wolfram Schultz. Beyond the fundamental neurobiology, organoid cultivation, and hardware development, our research explores the concept of “world-model-dependent intelligence”. This involves embedding brain organoids in virtual environments to study their ability to infer patterns from data, act upon them, and, most notably, learn from these interactions: a holy grail in organoid intelligence research.

Who started working on organoid intelligence?

The field now known as organoid intelligence (OI) has its roots in early work on cultured neuronal networks. In the late 1990s and early 2000s, Dr. William L. Ditto at the Georgia Institute of Technology was among the first to explore the computational capabilities of living neuronal networks.

Building on this foundation, in the early 2000s, Dr. Steve M. Potter, also at Georgia Tech, pioneered research on “hybrots” (hybrid robots) and embodied cultured networks. Then, a significant leap came in 2013 when Dr. Madeline Lancaster and her lab developed the first protocol for creating cerebral organoids, providing a powerful new tool for researchers.

The field gained additional momentum with Dr. Kagan’s 2022 study showing that neural cultures could learn to play Pong. In 2023, Dr. Thomas Hartung and his team at Johns Hopkins University coined the term “organoid intelligence”, synthesising previous work and outlining future directions.

To showcase the Neuroplatform’s capabilities, we developed an interactive demo that allows users to indirectly “control” a brain organoid in real time. Initially conceived by FinalSpark’s CEOs in early 2023, the concept evolved into a virtual butterfly whose flight path can be steered by a user-controlled “target” (e.g., food or light). When the butterfly “sees” the target, it flies towards it; otherwise, it meanders randomly, mimicking natural butterfly behaviour. While the idea seems simple, its implementation proved challenging in terms of neural interfacing, software engineering, and user experience design. To ensure widespread accessibility, we created the demo as a web application and web service, eliminating the need for software downloads or direct connections to the Neuroplatform’s internal network. But before going into the technical nitty-gritty of the demo and its interface with the brain organoids, which I anticipate will interest most readers, I’d like to direct your attention to our recent peer-reviewed publication in Frontiers.

Our Frontiers publication: Open and remotely accessible Neuroplatform for research in wetware computing

Our paper provides a comprehensive overview of the Neuroplatform’s technical and scientific foundations, offering valuable context beyond the butterfly demo. It marks our official introduction of the Neuroplatform to the scientific community and is an excellent resource for those seeking a deeper understanding of the underlying electronics and neurobiology.

The Demo Experience

Building on the core concept of a butterfly perceiving a target and choosing to either fly towards it or meander randomly, we aimed to transform this simple behaviour into a visually stunning and highly interactive experience. Our objective was to develop an accessible, highly performant, and engaging demonstration for a non-scientific audience that effectively showcases the capabilities of our brain organoid-controlled system.

To fully realise the potential of this concept, we developed a virtual world where the butterfly can navigate freely. Users can intuitively drag and zoom around the scene, gaining a comprehensive view of the brain organoid’s virtual avatar.

Why the extra effort?

We could have demonstrated the “butterfly sees target” concept with a simple 2D demo. But where’s the fun in that? Instead, we chose to push boundaries and challenge ourselves by going with the complexity of a 3D interactive experience—because cutting-edge tech deserves a cutting-edge showcase. This isn’t just about today’s capabilities—it’s a small window into the future, offering a preview of where wetware computing might be in 10 or 20 years. After all, innovation isn’t just about function; it’s about inspiring imagination and possibility.

You can also watch my trailer video of the project on my YouTube channel, which gives an overview of the Butterfly demo.

Our design philosophy centred on creating a natural yet slightly surreal environment. We opted for a predominantly green colour palette to evoke a sense of nature while subtly hinting at the iconic “Matrix” aesthetic. For the butterfly model, we used a blue Cracker butterfly model sourced from Sketchfab, flying around a generic “nature diorama” model assembled from various 3D assets. We incorporated simple plants, a round grass and dirt floor with stones, and watery reflections to enhance the natural feel. Our aim was to make everything look fun and friendly for a concept that some people might feel uneasy about (after all, it’s a brain-in-a-jar example).

User experience was a key consideration in our design. We implemented orbit controls, allowing users to navigate the 3D space using game-standard mouse drags (rotate and pan), with zoom functionality (within set limits to prevent disorientation). The butterfly’s behaviour is designed to mimic natural flight patterns, including a wing-flapping animation, speed variations based on target visibility, and a tendency to remain near the centre of the world. To clearly indicate when the butterfly “sees” its target, we added a blue line connecting the butterfly and the target (which follows the computer cursor), along with a colour change in the visibility indicator (red and dotted when not visible).
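The speed variation and the tendency to stay near the centre of the world could be combined per frame roughly as follows. This is a minimal, dependency-free sketch, not the demo’s code: the constants, the linear growth of the centre pull with distance, and the names `flightSpeed`, `applyCentreTendency`, and `stepButterfly` are all hypothetical.

```typescript
// Minimal 3D vector type so the sketch runs without three.js.
type Vec3 = { x: number; y: number; z: number };

// Hypothetical tuning constants, chosen for illustration only.
const BASE_SPEED = 0.02;       // world units per frame while meandering
const SEEK_SPEED_FACTOR = 1.8; // speed multiplier while the target is visible
const CENTRE_PULL = 0.05;      // maximum blend weight towards the world centre
const WORLD_RADIUS = 5;        // distance at which the pull reaches full strength

// The butterfly flies faster while it "sees" the target.
function flightSpeed(targetVisible: boolean): number {
  return targetVisible ? BASE_SPEED * SEEK_SPEED_FACTOR : BASE_SPEED;
}

// Blends a unit-length heading with the direction to the origin. The blend weight
// grows linearly with distance from the centre and is capped at CENTRE_PULL.
function applyCentreTendency(position: Vec3, heading: Vec3): Vec3 {
  const dist = Math.hypot(position.x, position.y, position.z);
  if (dist === 0) return heading; // already at the centre: nothing to correct
  const w = CENTRE_PULL * Math.min(dist / WORLD_RADIUS, 1);
  const blended = {
    x: heading.x * (1 - w) - (position.x / dist) * w,
    y: heading.y * (1 - w) - (position.y / dist) * w,
    z: heading.z * (1 - w) - (position.z / dist) * w,
  };
  const len = Math.hypot(blended.x, blended.y, blended.z) || 1;
  return { x: blended.x / len, y: blended.y / len, z: blended.z / len };
}

// Advances the butterfly by one frame along its centre-corrected heading.
function stepButterfly(position: Vec3, heading: Vec3, targetVisible: boolean): Vec3 {
  const dir = applyCentreTendency(position, heading);
  const s = flightSpeed(targetVisible);
  return { x: position.x + dir.x * s, y: position.y + dir.y * s, z: position.z + dir.z * s };
}
```

Scaling the pull with distance keeps the butterfly free to wander near the middle of the diorama while gently turning it back as it approaches the edge.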

To provide context and enhance user engagement, we integrated additional features beyond the 3D simulation. A live stream of neural data from the active brain organoid offers users a peek into the real-time activity driving the butterfly’s movements (more on that in the next chapter). We also included a periodically updated image of the active multi-electrode array, giving users a tangible connection to the physical hardware and organoid behind the simulation.

Managing user access was crucial for system stability. We developed a queuing system, including a “lobby room” and a welcome screen with an email form to control traffic and prevent spam (as seen in the overview in Figure 3). A short onboarding process explains the demo and its interaction design for desktop and mobile users. In parallel, a health check starts during onboarding; it verifies the overall system status and decides which brain organoid to select. To ensure fair access and maintain the brain organoid’s responsiveness, we limited each user’s interaction time to 60 seconds, which adds an element of urgency to the experience while serving multiple users efficiently. For more technical details on the tech stack, read the chapter “The Technology Behind the Demo”.
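A lobby with a fixed 60-second session limit can be modelled as a simple first-in, first-out queue. The sketch below is a hypothetical in-memory illustration (the demo keeps its real-time queue in Redis); it assumes a single active user at a time and takes the current time as a parameter so that it is easy to test. `SessionQueue` and its methods are illustrative names.

```typescript
// 60-second interaction limit from the text.
const SESSION_MS = 60_000;

interface ActiveSession {
  userId: string;
  endsAt: number; // epoch ms at which the session expires
}

// Hypothetical FIFO lobby granting one user at a time a fixed-length session.
class SessionQueue {
  private waiting: string[] = [];
  private active: ActiveSession | null = null;

  // Adds a user to the lobby unless they are already waiting or active.
  enqueue(userId: string): void {
    if (this.active?.userId === userId || this.waiting.includes(userId)) return;
    this.waiting.push(userId);
  }

  // Called periodically with the current time: expires the active session
  // after SESSION_MS and promotes the next waiting user.
  tick(now: number): ActiveSession | null {
    if (this.active && now >= this.active.endsAt) this.active = null;
    if (!this.active && this.waiting.length > 0) {
      const next = this.waiting.shift()!;
      this.active = { userId: next, endsAt: now + SESSION_MS };
    }
    return this.active;
  }

  // 0-based position in the lobby, or -1 if the user is not waiting.
  position(userId: string): number {
    return this.waiting.indexOf(userId);
  }
}
```

Serving several organoids in parallel would turn the single `active` slot into a small pool, one slot per healthy organoid.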

As demonstrated in the screen recording above, the result is a streamlined, performant demo application for desktop and mobile (with some compression and latency challenges included, of course) that offers users a unique window into the world of wetware computing.

How the Brain Organoid Controls the Butterfly

The core of our demonstration lies predominantly in the interaction between the brain organoid, the virtual world, and its butterfly avatar. This chapter will explain the mechanisms behind this interaction, providing a high-level overview and the technical details that make our demo possible.

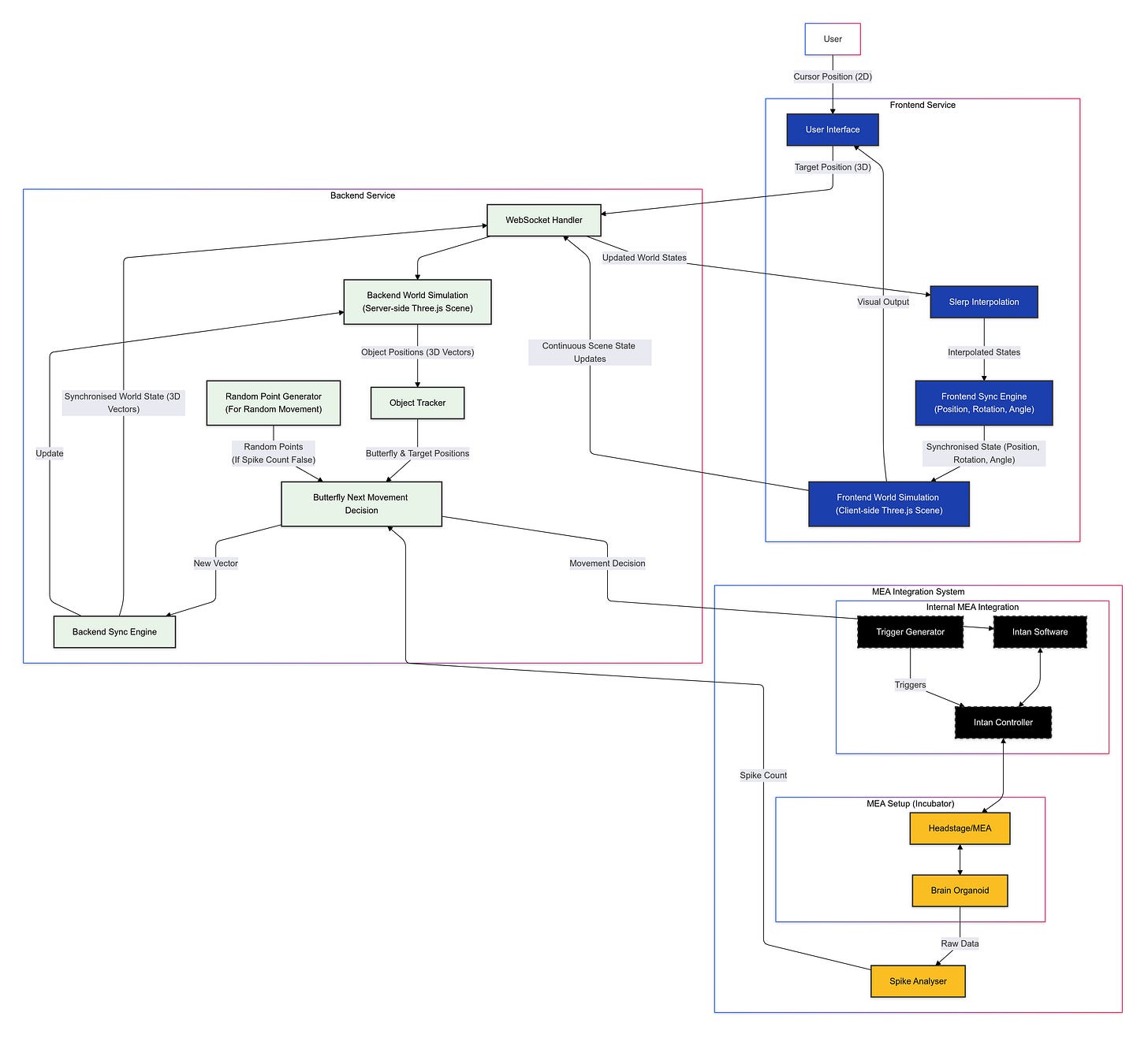

Let’s begin with a schematic view of the scene:

In our 3D environment, we have a butterfly 3D model attached to a 3D point (vector) in space. It has a defined front view and a back view. The butterfly’s field of view (FOV) is represented by a cone projecting from its front, with θ representing the half-angle of this cone (as shown in Figure 4). Within this cone, we employ raycasting to determine the position of the target vector, which the user controls via cursor movements (or touch inputs on mobile devices).

Raycasting, in this context, involves projecting a 3D vector from the butterfly to the target. We then calculate the dot product of this vector with the butterfly’s forward vector to determine if the target is “visible” to the butterfly (if the resulting scalar is above a certain threshold). This visibility check is crucial for the demo’s logic and is performed entirely through traditional software (as in: not using the brain organoids to do the maths).

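Mathematically, the visibility check can be represented as:

$$
V(t_v) = \begin{cases} 1, & \text{if } \mathbf{d} \cdot \mathbf{f} > \tau \\ 0, & \text{otherwise} \end{cases}
$$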

Where:

V(tᵥ) is the visibility at time tᵥ (1 for visible, 0 for not visible)

d is the normalised direction vector from the butterfly to the target

f is the normalised forward vector of the butterfly

τ is the visibility threshold (where τ = cos(θ), and θ is the half-angle of the butterfly’s FOV)

tᵥ is the time at which visibility is checked
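The visibility check translates almost directly into code. This is a dependency-free sketch; the `Vec3` type, the helper names, and the choice to treat a target at the butterfly’s exact position as not visible are assumptions rather than details of the demo’s implementation.

```typescript
type Vec3 = { x: number; y: number; z: number };

const sub = (a: Vec3, b: Vec3): Vec3 => ({ x: a.x - b.x, y: a.y - b.y, z: a.z - b.z });
const dot = (a: Vec3, b: Vec3): number => a.x * b.x + a.y * b.y + a.z * b.z;

function normalise(v: Vec3): Vec3 {
  const len = Math.hypot(v.x, v.y, v.z);
  return { x: v.x / len, y: v.y / len, z: v.z / len };
}

// V(t_v): 1 if the target lies inside the FOV cone of half-angle theta, otherwise 0.
function visibility(butterfly: Vec3, forward: Vec3, target: Vec3, thetaRad: number): 0 | 1 {
  const toTarget = sub(target, butterfly);
  if (dot(toTarget, toTarget) === 0) return 0; // degenerate: no direction to the target
  const d = normalise(toTarget);  // direction from butterfly to target
  const f = normalise(forward);   // butterfly's forward vector
  const tau = Math.cos(thetaRad); // τ = cos(θ)
  return dot(d, f) > tau ? 1 : 0;
}
```

Comparing against cos(θ) avoids computing an angle with `Math.acos`: the dot product of two unit vectors is the cosine of the angle between them, and cosine decreases monotonically on [0, π].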

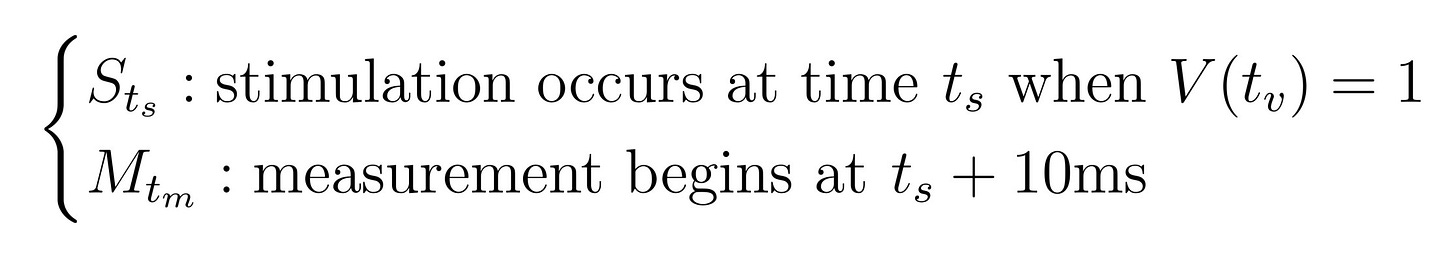

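The timing of stimulation and measurement can be represented as:

$$
S_{t_s}:\; t_s = t_v \text{ such that } V(t_v) = 1, \qquad M_{t_m}:\; t_m = t_s + 10\,\text{ms}
$$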

Where:

Sₜₛ represents the stimulation event

Mₜₘ represents the start of measurement

tₛ is the time when stimulation occurs (which is when the target becomes visible, i.e., when V(tᵥ) = 1)

tₘ = tₛ + 10ms is the time when measurement begins
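The relationship between tᵥ, tₛ, and tₘ can be sketched as a small scheduler that triggers a stimulation when the target becomes visible and starts the measurement 10 ms later. The optional re-stimulation period while the target stays visible is an assumption, added to reflect that the process repeats periodically; `StimulationScheduler` and its fields are hypothetical names.

```typescript
const MEASUREMENT_DELAY_MS = 10; // t_m = t_s + 10 ms

interface StimulationEvent {
  ts: number; // stimulation time t_s (ms)
  tm: number; // measurement start t_m (ms)
}

// Emits a stimulation when visibility switches from 0 to 1 and, as an assumption,
// again every `periodMs` while the target stays visible.
class StimulationScheduler {
  private wasVisible = false;
  private lastStimulation: number | null = null;
  private readonly periodMs: number;

  constructor(periodMs: number) {
    this.periodMs = periodMs;
  }

  // Feed one visibility sample V(t_v) taken at time tv (ms).
  update(tv: number, v: 0 | 1): StimulationEvent | null {
    const becameVisible = v === 1 && !this.wasVisible;
    const periodElapsed =
      v === 1 && this.lastStimulation !== null && tv - this.lastStimulation >= this.periodMs;
    this.wasVisible = v === 1;
    if (!becameVisible && !periodElapsed) return null;
    this.lastStimulation = tv;
    return { ts: tv, tm: tv + MEASUREMENT_DELAY_MS };
  }
}
```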

The brain organoid’s role in this setup is to make a binary decision: Should the butterfly move towards the target or fly in a random pattern? It’s important to note that the actual movement patterns—both towards the target and the random flight—are also implemented via traditional software. The organoid provides the decision-making input to determine which pre-programmed behaviours the butterfly exhibits.

To enable this decision-making process, we send a basic electrical stimulation via the MEA to the brain organoid when the target is visible within the butterfly’s FOV. The organoid, which typically exhibits spontaneous spike activity, responds with elicited non-spontaneous spikes. We then count these elicited spikes within a specific time frame post-stimulation to determine the organoid’s “decision”.

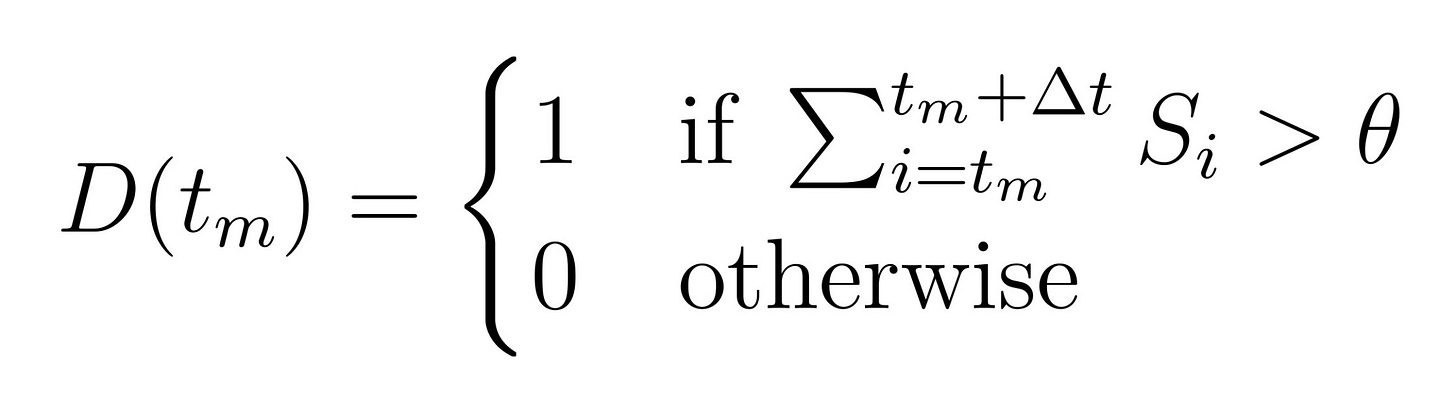

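The decision-making process can be represented mathematically as follows:

$$
D(t_m) = \begin{cases} 1, & \text{if } \sum_{i=t_m}^{t_m + \Delta t} S_i > \theta \\ 0, & \text{otherwise} \end{cases}
$$

Where:

D(tₘ) is the decision at time tₘ (1 for moving towards the target, 0 for random flight pattern)

Sᵢ is the spike count at time i

Δt is the time window for counting spikes after measurement begins

θ is the spike-count threshold for decision-making (not to be confused with θ, the half-angle of the butterfly’s FOV, used in the visibility check)

tₘ is the time when measurement begins, as defined by Mₜₘ in the previous equation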

This process occurs periodically, allowing for continuous updates to the butterfly’s behaviour based on the brain organoid’s activity.

Here’s a simplified code snippet that illustrates this decision-making process:

```typescript
function decideButterflyAction(isTargetVisible: boolean, elicitedSpikeCount: number): boolean {
  const SPIKE_THRESHOLD = 5; // exemplary
  // Δt in milliseconds, also exemplary: the window over which elicitedSpikeCount
  // is assumed to have been counted upstream (not used directly in this function)
  const TIME_WINDOW = 200;

  if (isTargetVisible && elicitedSpikeCount > SPIKE_THRESHOLD) {
    return true; // Move towards target
  }
  return false; // Move randomly
}
```

This exemplary function encapsulates the core logic of our interface to the brain organoid. It takes two parameters: whether the target is visible and the count of elicited spikes. If the target is visible and the number of elicited spikes within the specified time window exceeds our predefined threshold, the function returns true, instructing the butterfly to move towards the target. Otherwise, it returns false, triggering random flight movement.
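The function above receives a precomputed spike count. One way to obtain that count, assuming the MEA data arrives as an ascending array of spike timestamps in milliseconds (a hypothetical format), is a pair of binary searches over the half-open window [tₘ, tₘ + Δt). Note that this counts every spike in the window; separating elicited from spontaneous spikes would require a baseline estimate, which this sketch omits.

```typescript
// Index of the first element >= value in an ascending array (binary search).
function lowerBound(sorted: number[], value: number): number {
  let lo = 0;
  let hi = sorted.length;
  while (lo < hi) {
    const mid = (lo + hi) >>> 1;
    if (sorted[mid] < value) lo = mid + 1;
    else hi = mid;
  }
  return lo;
}

// Number of spikes with timestamps in [tm, tm + windowMs).
function countSpikesInWindow(spikeTimesMs: number[], tm: number, windowMs: number): number {
  return lowerBound(spikeTimesMs, tm + windowMs) - lowerBound(spikeTimesMs, tm);
}
```

The result would be passed as `elicitedSpikeCount`; binary search keeps the lookup at O(log n) even when the buffer of recent spikes grows large.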

The actual implementation of the butterfly’s movement is handled by separate functions (both on the frontend and backend, more on that in the next chapter). For instance, the movement towards the target is achieved through vector calculations and interpolation. Here’s an exemplary code snippet:

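```typescript
import { Vector3 } from "three";

function moveTowardsTarget(butterflyPosition: Vector3, targetPosition: Vector3, speed: number): Vector3 {
  // Unit vector pointing from the butterfly to the target
  const direction = new Vector3().subVectors(targetPosition, butterflyPosition).normalize();
  // clone() returns a new position instead of mutating the caller's vector in place
  return butterflyPosition.clone().add(direction.multiplyScalar(speed));
}
```

This function calculates the direction vector from the butterfly to the target, normalises it, and then moves the butterfly along this vector by the specified speed (a distance per call).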
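Because `speed` in the snippet above is a fixed distance per call, the butterfly can overshoot the target once it gets close, and its pace depends on the frame rate. A common remedy, shown here as a dependency-free sketch rather than the demo’s code, is to scale the step by the elapsed time and clamp it to the remaining distance; `stepTowards` and its parameters are hypothetical.

```typescript
type Vec3 = { x: number; y: number; z: number };

// Moves `position` towards `target` by at most speedPerSecond * dtSeconds.
// Returns a new vector and never overshoots; the inputs are left untouched.
function stepTowards(position: Vec3, target: Vec3, speedPerSecond: number, dtSeconds: number): Vec3 {
  const dx = target.x - position.x;
  const dy = target.y - position.y;
  const dz = target.z - position.z;
  const dist = Math.hypot(dx, dy, dz);
  const maxStep = speedPerSecond * dtSeconds;
  if (dist <= maxStep) return { ...target }; // close enough: arrive exactly
  const k = maxStep / dist;                   // fraction of the remaining distance to cover
  return { x: position.x + dx * k, y: position.y + dy * k, z: position.z + dz * k };
}
```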

The random movement, on the other hand, is generated using a more complex algorithm that creates a natural-looking flight pattern. A simplified version of its core step:

```typescript
import { Vector3 } from "three";

function generateRandomMovement(currentPosition: Vector3, maxDistance: number): Vector3 {
  // Uniform random offset of up to ±maxDistance along each axis
  const randomOffset = new Vector3(
    (Math.random() - 0.5) * 2 * maxDistance,
    (Math.random() - 0.5) * 2 * maxDistance,
    (Math.random() - 0.5) * 2 * maxDistance
  );
  // clone() keeps currentPosition unchanged; the result is the next waypoint
  return currentPosition.clone().add(randomOffset);
}
```

This function generates a random offset of up to a specified maximum distance along each axis and adds it to the current position, creating a new waypoint for the butterfly to move towards slowly. To make the movement more realistic, we also implemented a smoothing function using spherical linear interpolation (slerp), which creates more fluid transitions between movement states.
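Slerp interpolates between two orientations along the shortest arc on the sphere of unit quaternions. The self-contained sketch below implements it; exactly how the demo applies slerp is not described here, so the `Quat` type and the helper names are assumptions.

```typescript
// Unit quaternion: (x, y, z) = axis * sin(angle / 2), w = cos(angle / 2).
type Quat = { x: number; y: number; z: number; w: number };

// Builds a rotation of `angle` radians about a unit-length axis.
function fromAxisAngle(ax: number, ay: number, az: number, angle: number): Quat {
  const s = Math.sin(angle / 2);
  return { x: ax * s, y: ay * s, z: az * s, w: Math.cos(angle / 2) };
}

function normaliseQuat(q: Quat): Quat {
  const len = Math.hypot(q.x, q.y, q.z, q.w);
  return { x: q.x / len, y: q.y / len, z: q.z / len, w: q.w / len };
}

// Spherical linear interpolation between unit quaternions a and b, t in [0, 1].
function slerp(a: Quat, b: Quat, t: number): Quat {
  let cos = a.x * b.x + a.y * b.y + a.z * b.z + a.w * b.w;
  // q and -q encode the same rotation: flip b so we take the shorter arc.
  let bb = b;
  if (cos < 0) {
    cos = -cos;
    bb = { x: -b.x, y: -b.y, z: -b.z, w: -b.w };
  }
  // Nearly parallel: sin(theta) is close to 0, so use normalised linear interpolation.
  if (cos > 0.9995) {
    return normaliseQuat({
      x: a.x + (bb.x - a.x) * t,
      y: a.y + (bb.y - a.y) * t,
      z: a.z + (bb.z - a.z) * t,
      w: a.w + (bb.w - a.w) * t,
    });
  }
  const theta = Math.acos(cos);
  const sinTheta = Math.sin(theta);
  const wa = Math.sin((1 - t) * theta) / sinTheta;
  const wb = Math.sin(t * theta) / sinTheta;
  return {
    x: wa * a.x + wb * bb.x,
    y: wa * a.y + wb * bb.y,
    z: wa * a.z + wb * bb.z,
    w: wa * a.w + wb * bb.w,
  };
}
```

A common smoothing pattern is to call `current = slerp(current, desired, 0.1)` once per frame, which rotates the orientation a tenth of the remaining arc towards the desired heading each time and so eases in rather than snapping.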

It’s crucial to emphasise that while these movement functions are implemented in software, the choice between them is driven by the brain organoid’s response to stimulation. Our approach, while groundbreaking in that everything runs as a closed-loop, real-time system exposed to the public over the internet, is still in its early stages. Future iterations of this demo could involve more complex decision-making processes, multiple brain organoids working together, or even bidirectional learning, where the organoid adapts its responses based on the outcomes of its decisions in the virtual environment.

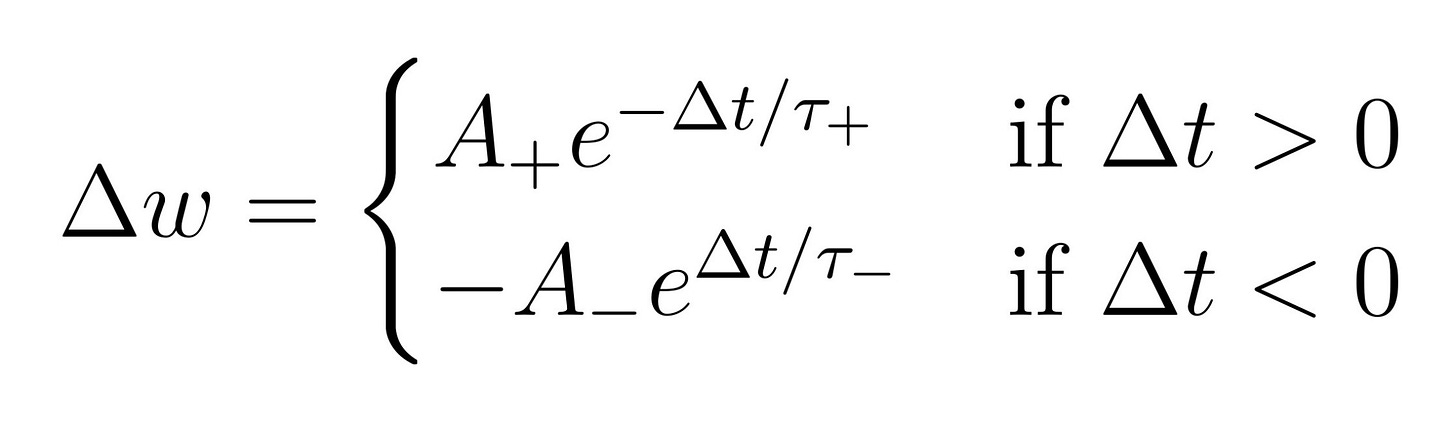

One potential avenue for future development is the implementation of a more sophisticated learning algorithm. For instance, we could incorporate a form of spike-timing-dependent plasticity (STDP), a neurobiological process that adjusts the strength of connections between neurons based on their relative spike timing. A simplified STDP rule for our organoids could be represented as:

$$
\Delta w = \begin{cases} A_{+}\, e^{-\Delta t/\tau_{+}}, & \text{if } \Delta t > 0 \\ -A_{-}\, e^{\Delta t/\tau_{-}}, & \text{if } \Delta t < 0 \end{cases}
$$

Where:

Δw is the change in synaptic weight

Δt = t_post − t_pre is the time difference between post- and pre-synaptic spikes (positive when the pre-synaptic spike comes first)

A+ and A- are the maximum amounts of synaptic modification

τ+ and τ- are the time constants for the decay of synaptic modification

Implementing such a simple exemplary learning rule could allow the brain organoid to adapt its behaviour over time, potentially learning to control the butterfly more effectively based on the outcomes of its decisions. We are actively working on this as we speak.
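The exponential STDP window fits in a few lines of code. This is a generic sketch of the textbook pair-based rule, not FinalSpark’s implementation; the sign convention Δt = t_post − t_pre, the illustrative parameter values, and the clipping of weights to [0, wMax] are assumptions.

```typescript
// Parameters of the pair-based STDP window.
interface StdpParams {
  aPlus: number;      // A+: maximum potentiation
  aMinus: number;     // A-: maximum depression
  tauPlusMs: number;  // τ+: decay constant for potentiation (ms)
  tauMinusMs: number; // τ-: decay constant for depression (ms)
}

// Δw for a single pre/post spike pair, with dtMs = t_post - t_pre.
function stdpDeltaW(dtMs: number, p: StdpParams): number {
  if (dtMs > 0) return p.aPlus * Math.exp(-dtMs / p.tauPlusMs);   // pre before post: strengthen
  if (dtMs < 0) return -p.aMinus * Math.exp(dtMs / p.tauMinusMs); // post before pre: weaken
  return 0; // exactly coincident spikes: no change in this simplified rule
}

// Applies the update and keeps the weight within [0, wMax].
function applyStdp(w: number, dtMs: number, p: StdpParams, wMax = 1): number {
  return Math.min(wMax, Math.max(0, w + stdpDeltaW(dtMs, p)));
}

// Illustrative parameter values only.
const params: StdpParams = { aPlus: 0.01, aMinus: 0.012, tauPlusMs: 20, tauMinusMs: 20 };
console.log(applyStdp(0.5, 10, params)); // pre fires 10 ms before post: weight rises slightly
```

Choosing A- slightly larger than A+ is a common way to keep runaway potentiation in check, since random spike pairings then produce a small net depression on average.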

The Technology Behind the Demo

This chapter explores the technical nitty-gritty of the demo. We’ll discuss some design choices and the rationale behind the system’s implementation. While we won’t reveal production code (it’s proprietary, after all), we’ll provide a comprehensive overview tailored for a somewhat technical audience.

One of the key architectural decisions was to decouple the demo’s front- and backend from the Neuroplatform’s core API as much as possible. This strategic decoupling served several purposes. Primarily, it allowed us to “eat our own dog food,” effectively becoming our first customers. By interacting with the Neuroplatform as any external user would, we were able to rigorously test its capabilities and identify areas for improvement early in the development process. This also enhanced security by limiting direct exposure of the Neuroplatform’s internal workings to the publicly accessible demo.

The frontend, responsible for rendering the 3D scene and handling user interaction, is built with React via Next.js, as it simplifies several things related to routing, SEO, and server-side pre-rendering. Leveraging React Three Fiber (R3F) on top of it provided a declarative and intuitive way to integrate Three.js, arguably the most powerful 3D graphics library for web apps, within the React ecosystem without performance or feature downsides (a screenshot of what R3F looks like is shown in Figure 5). R3F simplified scene management, optimised rendering performance (as a vanilla R3F scene usually outperforms the average Three.js scene), and streamlined our team’s development workflow by increasing the all-important iteration speed.

The backend employs NestJS, a Model-View-Controller (MVC) Node.js framework. NestJS, with its modular design and dependency injection capabilities, provides the perfect foundation for a scalable and maintainable server-side application where we can inject mocked and real interfaces to the MEA integration system. Regarding data persistence, we opted for a multi-tiered data storage strategy, using Redis for transient data like the MEA configuration and the real-time user queue, while user subscription data, requiring persistence, is stored in a simple PostgreSQL database.
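Injecting either a mocked or a real MEA interface comes down to programming against an interface. NestJS wires such dependencies through its provider system; the plain TypeScript sketch below shows only the underlying pattern. `MeaSource`, `MockMeaSource`, and `DecisionService` are hypothetical names, and the interface is kept synchronous for brevity, whereas a real hardware connection would almost certainly be asynchronous.

```typescript
// Hypothetical contract the backend depends on, instead of a concrete MEA client.
interface MeaSource {
  stimulate(atMs: number): void;
  spikesBetween(fromMs: number, toMs: number): number[]; // spike timestamps in ms
}

// Mock for development and tests: records stimulations and replays a fixed spike train.
class MockMeaSource implements MeaSource {
  readonly stimulations: number[] = [];
  private readonly spikes: number[];

  constructor(spikes: number[]) {
    this.spikes = [...spikes];
  }

  stimulate(atMs: number): void {
    this.stimulations.push(atMs);
  }

  spikesBetween(fromMs: number, toMs: number): number[] {
    return this.spikes.filter((t) => t >= fromMs && t < toMs);
  }
}

// The consumer receives its dependency through the constructor, so tests can pass
// the mock and production code can pass a client for the real MEA system.
class DecisionService {
  private readonly mea: MeaSource;
  private readonly spikeThreshold: number;

  constructor(mea: MeaSource, spikeThreshold: number) {
    this.mea = mea;
    this.spikeThreshold = spikeThreshold;
  }

  // Stimulates at ts, then counts spikes in [ts + delayMs, ts + delayMs + windowMs).
  decide(ts: number, delayMs: number, windowMs: number): boolean {
    this.mea.stimulate(ts);
    const tm = ts + delayMs;
    return this.mea.spikesBetween(tm, tm + windowMs).length > this.spikeThreshold;
  }
}
```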

The backend exposes a RESTful API that allows secure configuration updates for the MEA parameters without accessing the internal FinalSpark network from the outside. This API is protected by simple authentication and authorisation rules, which follow standard practices in API design and service-oriented design.
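Because the REST API accepts MEA parameter updates from outside the internal network, each request body should be validated before it reaches the configuration store. A minimal sketch, assuming a hypothetical config shape built from the parameters discussed in the previous chapter (spike threshold, counting window, and measurement delay) with purely illustrative bounds; authentication is assumed to have happened before this point.

```typescript
// Hypothetical MEA parameters; names and bounds are illustrative only.
interface MeaConfig {
  spikeThreshold: number;     // θ: elicited spikes needed to move towards the target
  timeWindowMs: number;       // Δt: spike-counting window
  measurementDelayMs: number; // t_m - t_s (10 ms in the text)
}

type ParseResult = { ok: true; config: MeaConfig } | { ok: false; errors: string[] };

const BOUNDS: Record<keyof MeaConfig, [number, number]> = {
  spikeThreshold: [0, 1000],
  timeWindowMs: [1, 5000],
  measurementDelayMs: [0, 1000],
};

// Validates an untrusted request body before it is written to the config store.
function parseMeaConfig(body: unknown): ParseResult {
  if (typeof body !== "object" || body === null) {
    return { ok: false, errors: ["body must be a JSON object"] };
  }
  const input = body as Record<string, unknown>;
  const errors: string[] = [];
  for (const key of Object.keys(BOUNDS) as (keyof MeaConfig)[]) {
    const [min, max] = BOUNDS[key];
    const value = input[key];
    if (typeof value !== "number" || !Number.isFinite(value) || value < min || value > max) {
      errors.push(`${key} must be a number in [${min}, ${max}]`);
    }
  }
  if (errors.length > 0) return { ok: false, errors };
  return {
    ok: true,
    config: {
      spikeThreshold: input.spikeThreshold as number,
      timeWindowMs: input.timeWindowMs as number,
      measurementDelayMs: input.measurementDelayMs as number,
    },
  };
}
```

Returning every error at once, rather than failing on the first, lets the caller fix a bad request in a single round trip.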

Real-time, bidirectional communication between the frontend, backend, and the MEA system is the lifeblood of the interactive experience, as shown in the information flow chart in Figure 6. This is achieved using WebSocket, which integrates seamlessly with NestJS and React (e.g. via Socket.io). The ‘WebSocket Handler’ is the central hub for managing client connections and disconnections, transmitting user input (e.g. the periodic cursor/target position), and relaying instructions for the butterfly’s movement, which are calculated on the server. This server-side calculation is critical because the backend also interfaces with the MEA data stream via a dedicated component, the MEA integration service. Calculating movement on the server minimises latency and ensures the butterfly’s behaviour directly reflects the organoid’s activity. It also hides complexity from the frontend, which already carries heavy business logic for the simulation, the MEA data streaming for visualisation, and more, and would otherwise become an even thicker client.
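The message flow over the WebSocket can be made explicit with typed messages. The sketch below is transport-agnostic (it does not use Socket.io’s API), and its message names and fields are hypothetical: the client throttles its periodic target updates, and the server validates each incoming payload before using it.

```typescript
// Hypothetical message shapes for both directions.
type ClientMessage = { type: "target"; x: number; y: number; z: number; sentAt: number };
type ServerMessage =
  | { type: "butterfly"; x: number; y: number; z: number; seeking: boolean }
  | { type: "sessionEnded" };

// Client side: sends at most one target update every `intervalMs`.
class TargetThrottle {
  private lastSent = -Infinity;
  private readonly intervalMs: number;

  constructor(intervalMs: number) {
    this.intervalMs = intervalMs;
  }

  maybeBuild(x: number, y: number, z: number, now: number): ClientMessage | null {
    if (now - this.lastSent < this.intervalMs) return null; // too soon: drop this update
    this.lastSent = now;
    return { type: "target", x, y, z, sentAt: now };
  }
}

// Server side: parses and validates a raw frame; anything malformed is rejected.
function parseClientMessage(raw: string): ClientMessage | null {
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch {
    return null; // not valid JSON
  }
  if (typeof parsed !== "object" || parsed === null) return null;
  const m = parsed as Record<string, unknown>;
  const { x, y, z, sentAt } = m;
  if (m.type !== "target" || typeof x !== "number" || typeof y !== "number" ||
      typeof z !== "number" || typeof sentAt !== "number") {
    return null;
  }
  return { type: "target", x, y, z, sentAt };
}
```

Dropping intermediate cursor positions is acceptable here because only the latest target position matters to the server-side movement logic.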

The MEA integration service manages the connection to the internal MEA integration system, which exposes the Trigger Generator and Intan Software socket endpoints, as shown in Figure 7. This service continuously polls the server for incoming neural data, applying spike counting and thresholding based on the active MEA configuration retrieved from Redis. The resulting binary decision described in the previous chapter (whether the butterfly flies towards the target or meanders randomly) is then fed into the backend’s movement calculation logic, creating the effect of the brain organoid directly piloting the butterfly.
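The polling-and-thresholding step could look roughly like this. It is a simplified, synchronous sketch: `MeaIntegrationService`, the config-provider callback standing in for the Redis lookup, and the decision callback are hypothetical, and the sketch assumes each measurement is scheduled no earlier than the most recent poll. A decision is emitted only once its counting window [tₘ, tₘ + Δt) has closed.

```typescript
interface MeaConfig {
  spikeThreshold: number; // θ
  timeWindowMs: number;   // Δt
}

// Stand-ins for the Redis config lookup and the movement logic.
type ConfigProvider = () => MeaConfig;
type DecisionHandler = (tm: number, moveTowardsTarget: boolean) => void;

class MeaIntegrationService {
  private buffer: number[] = [];  // spike timestamps (ms) still needed
  private pending: number[] = []; // measurement start times t_m awaiting a decision
  private readonly getConfig: ConfigProvider;
  private readonly onDecision: DecisionHandler;

  constructor(getConfig: ConfigProvider, onDecision: DecisionHandler) {
    this.getConfig = getConfig;
    this.onDecision = onDecision;
  }

  scheduleMeasurement(tm: number): void {
    this.pending.push(tm);
  }

  // Called on every poll with the newly received spikes and the current time (ms).
  poll(newSpikes: number[], now: number): void {
    this.buffer.push(...newSpikes);
    // Re-read the config on each poll so updates made via the API take effect.
    const { spikeThreshold, timeWindowMs } = this.getConfig();
    const stillPending: number[] = [];
    for (const tm of this.pending) {
      const end = tm + timeWindowMs;
      if (now < end) {
        stillPending.push(tm); // window still open
        continue;
      }
      const count = this.buffer.filter((t) => t >= tm && t < end).length;
      this.onDecision(tm, count > spikeThreshold);
    }
    this.pending = stillPending;
    // Discard spikes that no open or future window can still need.
    const keepFrom = Math.min(now, ...this.pending);
    this.buffer = this.buffer.filter((t) => t >= keepFrom);
  }
}
```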

With a careful allocation of responsibilities and strategic use of various technologies, this simple yet well-thought-through architecture forms the backbone of our butterfly demo. Developing the demo iteratively, we ran performance tests, traced errors, and improved latency, and each round informed further technical decisions. The proof of concept for this demo has been made production-ready for a few hundred users. However, scaling the system to thousands of users would require several changes, affecting both the 3D simulation and the backend. This is part of the ongoing work for the next version.

Future Outlooks

Now that we’ve laid bare the technical underpinnings and logic behind our butterfly demo, it is apparent that we are still in the early stages of this technology and that things are simpler than they might seem at first sight. Nevertheless, the potential implications and future applications of our work are profound. My decision to join the FinalSpark team was driven by the recognition of this potential and the belief that our current research will significantly impact multiple scientific domains.

The work at FinalSpark is highly interdisciplinary, amalgamating theories and methodologies from artificial intelligence, neuroscience, biology, virtual reality, and brain-computer interfaces. Our primary focus on utilising brain organoids for wetware computing and, ultimately, synthetic biological intelligence generates a wealth of technological advancements that extend far beyond our immediate objectives.

One of the most evident applications of our work lies in pharmaceutical research. As we develop more sophisticated brain organoids, these models can be leveraged for advanced disease modelling, potentially revolutionising drug discovery and reducing animal suffering. Similarly, our work on memory encoding in organoids directly translates to basic engram research in neuroscience, potentially unlocking new insights into the fundamental mechanisms of memory formation and retrieval. Beyond obvious use cases such as combating dementia, this could also benefit brain-computer interfaces and AI.

However, one of our research’s less obvious but equally compelling offshoots is what I term “ectopic cognitive preservation”. This concept includes theoretical and practical methodologies for capturing and maintaining the structural and functional integrity of human cognitive processes beyond the limits set by biology, such as ageing and cognitive decline, with lifespan extension as the ultimate aim. The term “ectopic” in this context refers to the preservation and maintenance of cognitive functions outside their original biological context. It implies sustaining and potentially enhancing cognitive processes in an artificial/virtual environment, separate from the human body.

To realise ectopic cognitive preservation, we need to develop technologies closely related to those we’re creating for wetware computing at FinalSpark, as indicated in the technological roadmap in Figure 8, but on a drastically larger scale and within a more medically oriented context (including surgery). The experiential aspect of this preserved cognition could be facilitated through immersion in a virtual world, conceptually similar to our butterfly demo but orders of magnitude more complex and realistic. This virtual environment would incorporate extensive sensory input and output capabilities, essentially creating a tangible version of the “Matrix”. However, I prefer to draw parallels with more optimistic portrayals of such technology, such as the “San Junipero” episode of the television series Black Mirror, shown in Figure 9, which presents a poetic vision of consciousnesses existing within a virtual reality.

Our present work excludes several crucial aspects necessary for human-scale ectopic cognitive preservation, such as the vascularisation and nutrition of the explanted brain tissue, the complex sensory inputs required for a phenomenologically rich human brain experience, and the general challenge of counteracting ageing in an ex vivo brain (ectopic brain tissue still ages and eventually dies).

More about ectopic cognitive preservation

For those interested in following the progress in this field, I encourage you to stay connected via my social media channels (@danburonline). I’m engaged in other aspects of ectopic cognitive preservation research independently of FinalSpark, and there will be exciting announcements soon. These upcoming projects align closely with my personal motivations described here.

The journey from our current state of technology to the realisation of ectopic cognitive preservation is long and fraught with challenges. However, each advancement, whether in organoid development, brain-computer interfacing, or virtual world creation, brings us closer to this ambitious goal. As we continue our work at FinalSpark and in the broader scientific community, we’re not just developing new technologies—we’re potentially reshaping the future of human cognition and phenomenological experience.

Appendix: Short Discourse on the Ethics of Consciousness

The preceding chapters have detailed the Neuroplatform’s technical and theoretical underpinnings and its potential to revolutionise our understanding of BNNs, ANNs and beyond. However, as with any scientific advancement that probes the nature of consciousness and intelligence, the Neuroplatform raises profound ethical questions. This appendix offers a preliminary exploration of these ethical considerations, guided by the principles of Integrated Information Theory (IIT), a theory initially developed by neuroscientist Giulio Tononi.

Why Integrated Information Theory?

Integrated Information Theory (IIT) stands out among theories of consciousness for its unique approach to quantifying and predicting conscious experience. Unlike many theories that remain in the realm of philosophy or psychology, IIT offers testable hypotheses and measurable outcomes in neuroscience. It provides a mathematical framework for assessing the neural correlates of consciousness (NCC), allowing researchers to make specific predictions about which neural systems are capable of supporting conscious experiences.

IIT’s measure of integrated information, denoted by Φ, offers a quantitative approach to comparing the consciousness of different systems. This makes IIT particularly valuable for addressing ethical questions about potential consciousness in artificial systems, such as our brain organoids. While not without its critics, IIT’s empirical testability and ability to bridge the gap between subjective experience and objective neural activity make it a compelling framework for our ethical considerations.

Now, can these “mini-brains,” currently limited to approximately 10,000 neurons, experience anything? IIT provides a framework for addressing this question. According to IIT, consciousness arises from the capacity of a system to integrate information in a way that is irreducible to its parts. This integration is quantified by Φ. Crucially, IIT posits specific structural and functional requirements for a system to achieve a high Φ value, indicative of a rich conscious experience.

Despite exhibiting spontaneous electrical activity, current brain organoids lack, even compared to embryonic brains, the complex, recurrent, and bidirectional connectivity necessary for a high Φ value (an exemplary visualisation of what that means is shown in Figure 10). They’re missing the specialised neural pathways required for the high levels of information integration that IIT associates with consciousness. Consequently, these organoids likely possess nothing beyond rudimentary cause-effect structures, akin to simple organisms or even just plants, far below the complexity required for subjective experience. This view aligns with the Integrated Information-Consciousness (IC) plane drawn by Christof Koch, a long-time collaborator of Giulio Tononi on IIT. When Koch was asked about brain organoid consciousness, his IC plane (shown in Figure 11) positioned current organoids far from the zone associated with human-level consciousness.

Traditional ethical frameworks often emphasise autonomy, sentience, and suffering. However, as we enter the stage of systems with varying degrees of information integration, these frameworks may prove inadequate. IIT offers a potential foundation for a more nuanced, scientifically grounded ethical approach. By focusing on a system’s capacity for integrated information, we can begin to assess its potential for subjective experience and, consequently, the ethical considerations it warrants. A system with a higher Φ, exhibiting greater information integration, might warrant greater ethical consideration than a system with a lower Φ, even if neither exhibits traditional markers of sentience.

If consciousness is indeed a product of information integration, then moral responsibility might also be viewed as a function of Φ. This raises intriguing questions about the moral status of AI systems and other non-biological entities capable of complex information processing.

To conclude this appendix: The FinalSpark Neuroplatform represents a significant leap forward in our ability to study biological intelligence (and AI), but this power comes with responsibility. By embracing a scientifically grounded, information-based ethical framework, we can navigate the complex landscape surrounding brain organoid research and wetware computing, ensuring these powerful tools are used responsibly while maximising their potential. At FinalSpark, we recognise the importance of these ethical considerations and are actively developing a comprehensive ethics charter (still to be released). This charter will address all the topics discussed in this appendix and serve as a guiding document for our research and development efforts, ensuring we remain at the forefront of scientific innovation and ethical responsibility in brain organoid research, wetware computing and synthetic biological intelligence.
